Prior to designing and iterating on possible solutions for this problem space, I performed seven early research activities to establish project requirements and to better understand my stakeholders, including trainers, curators, veterinarians, managers, visitors, and the sea otters themselves. These activities included:

I. Semi-structured interviews with four trainers and one associate curator on the mammal and bird team to learn about the trainers, the otters, and the aquarium. Each interview consisted of around 15 open-ended questions, and I often asked "could you tell me more about that?" when I needed more detail from a participant.

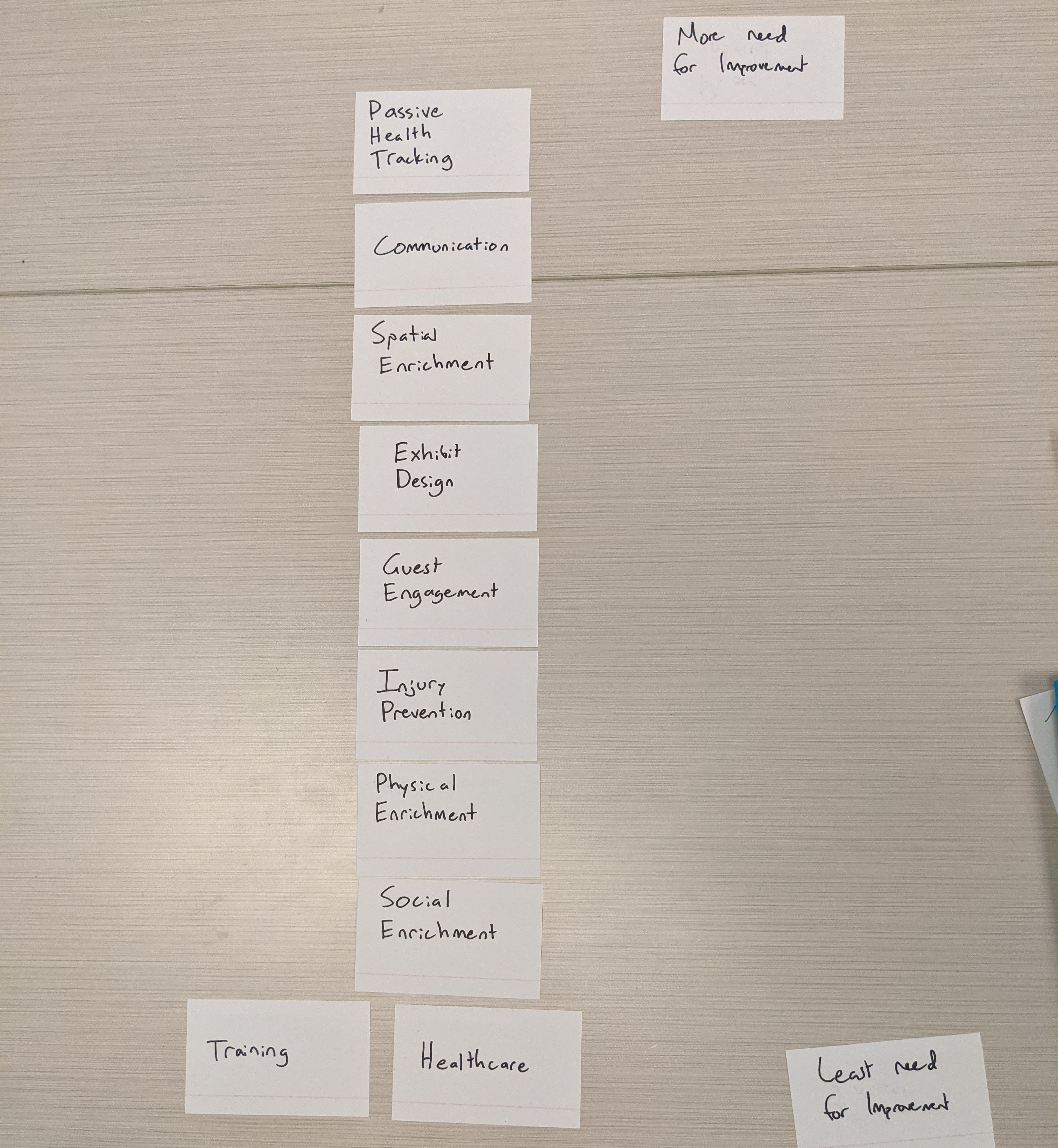

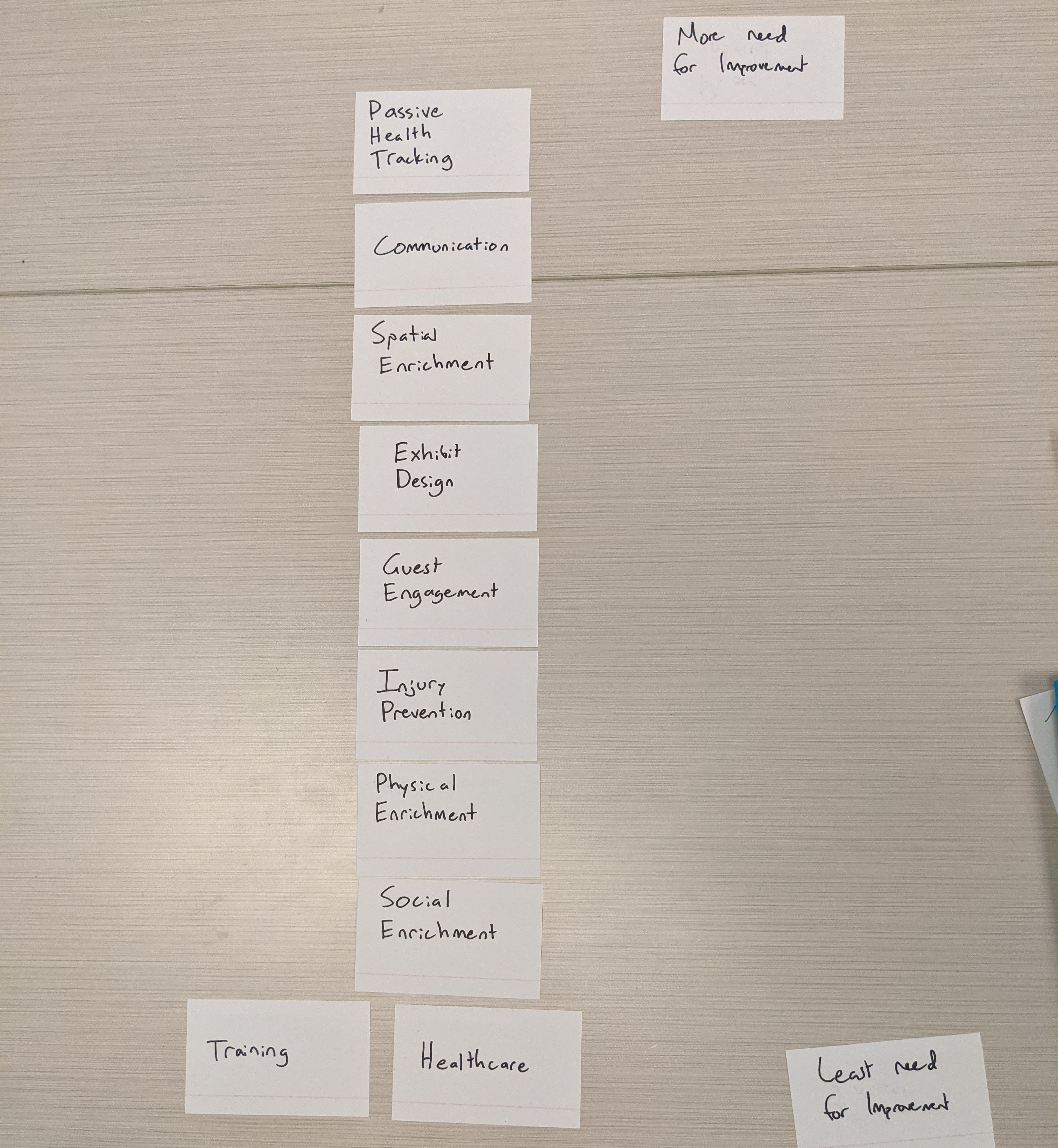

II. User-Value Ranking of different project spaces with the trainers and curator interviewed. I asked participants to rank the project spaces first by how interested they were in each one, and then by how strongly they felt the aquarium would benefit from a project in that space.

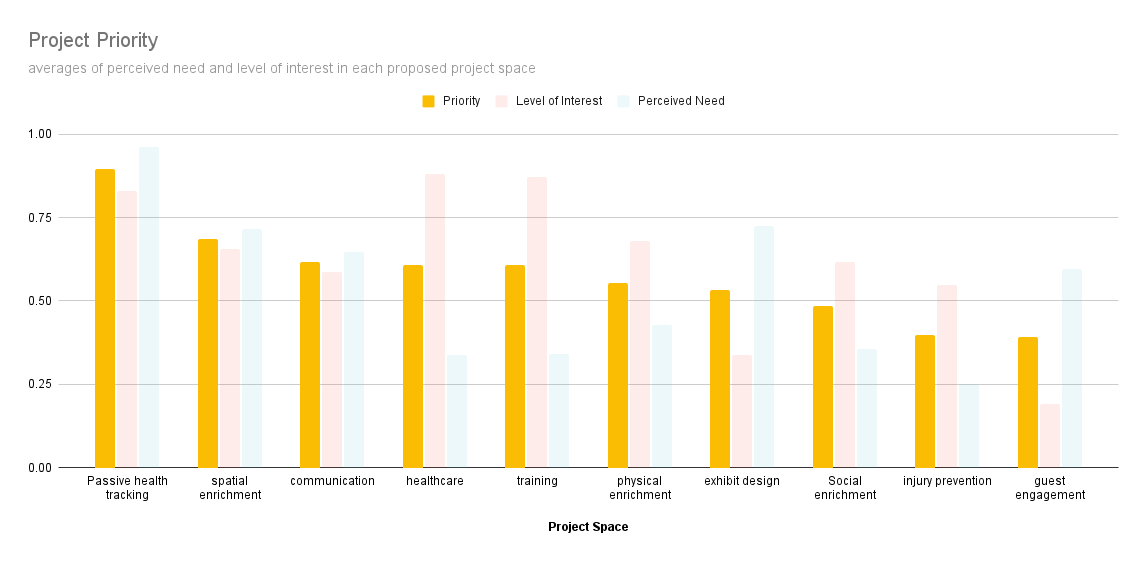

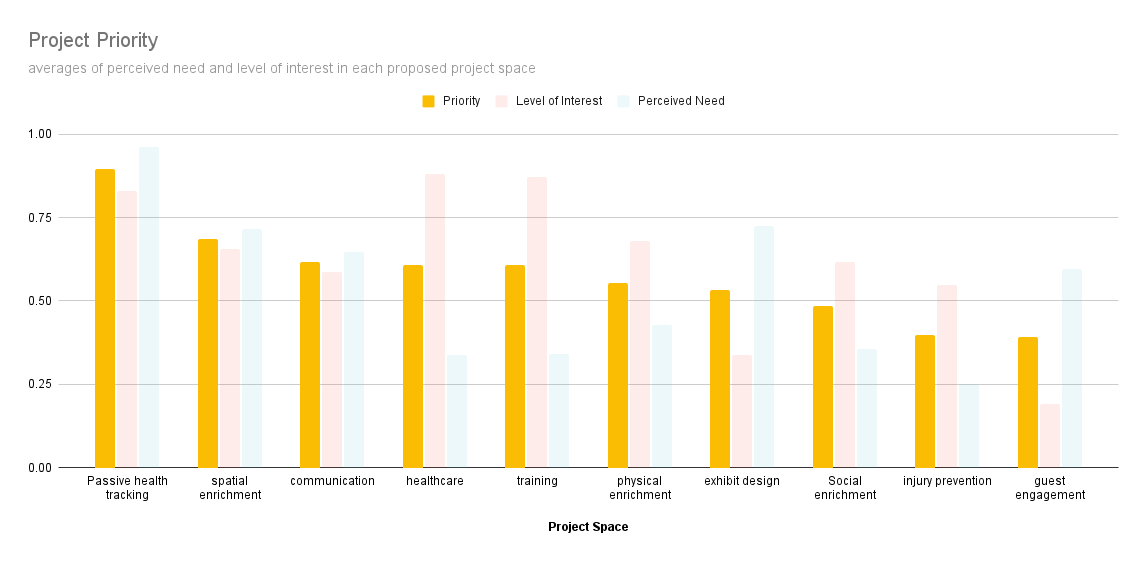

III. Data Visualization of the above user-value ranking results, which I later presented to the entire mammal and bird team when deciding which specific project idea to pursue.

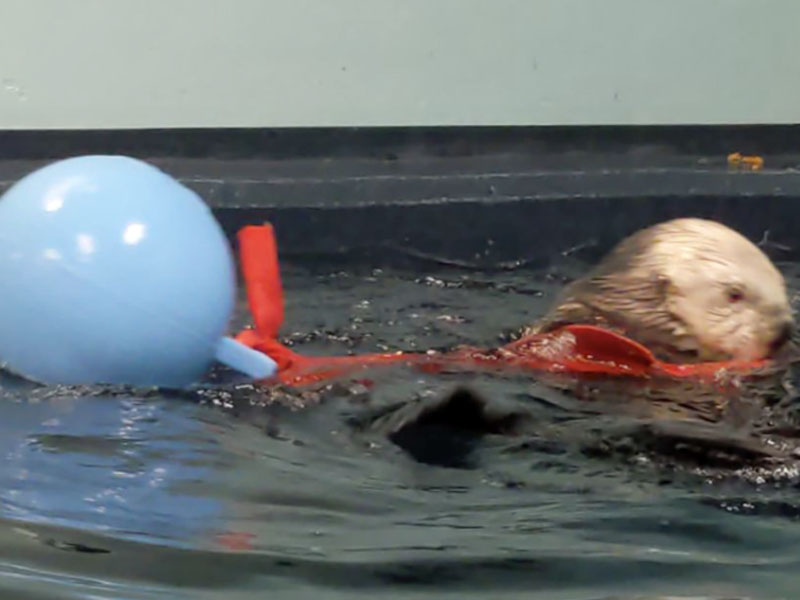

IV. Observation of the sea otters both on display and in back of house in their habitat to better understand how they interact with toys. This also helped me gain an understanding of where aquarium visitors spent most of their time, and what they did while viewing or passing by the sea otters.

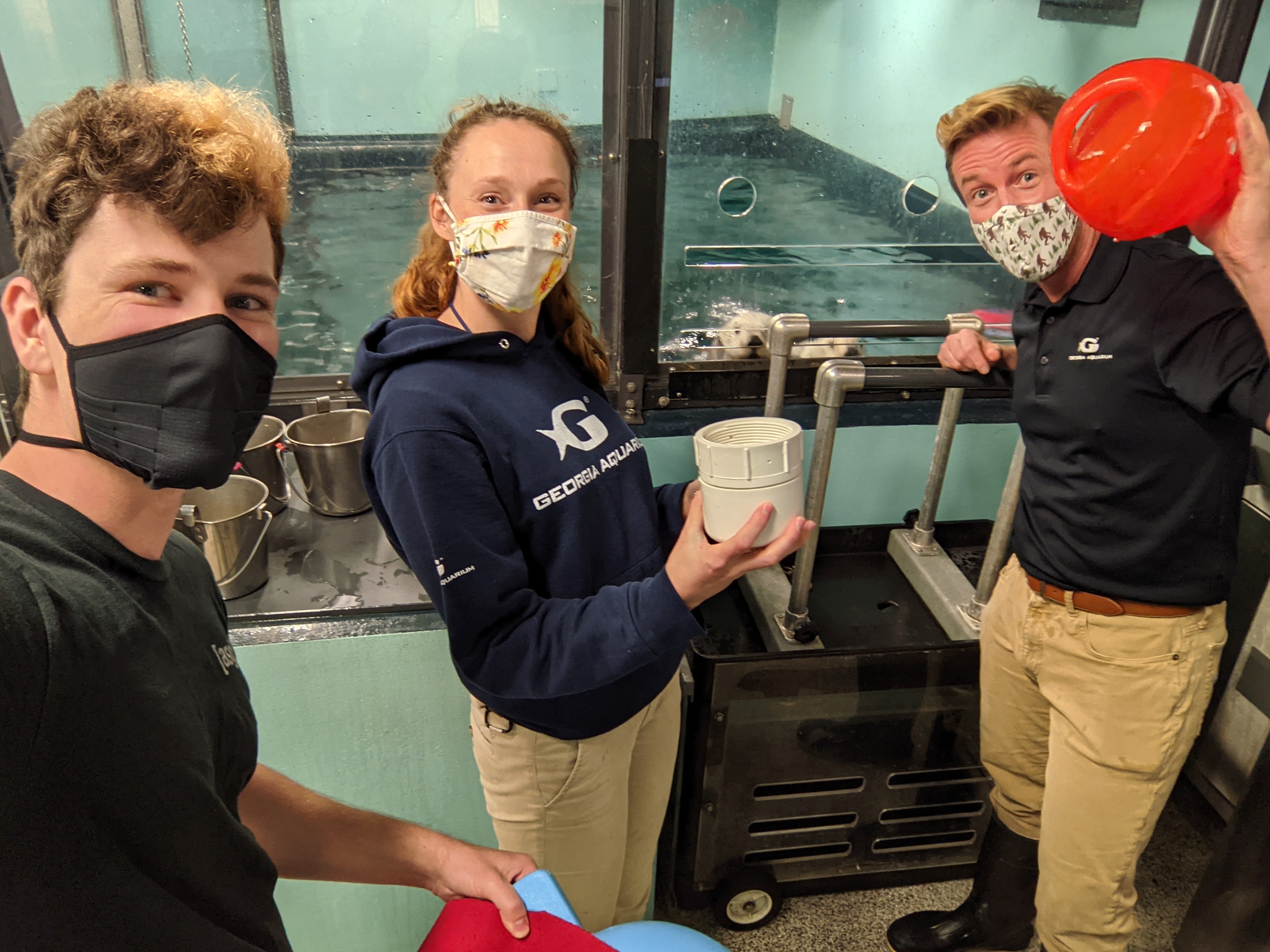

V. Usability Testing of Low-Fidelity Prototypes of otter toys. By designing unconventional enrichment devices for the otters, I sought to discover how they interacted with novel and unfamiliar devices.

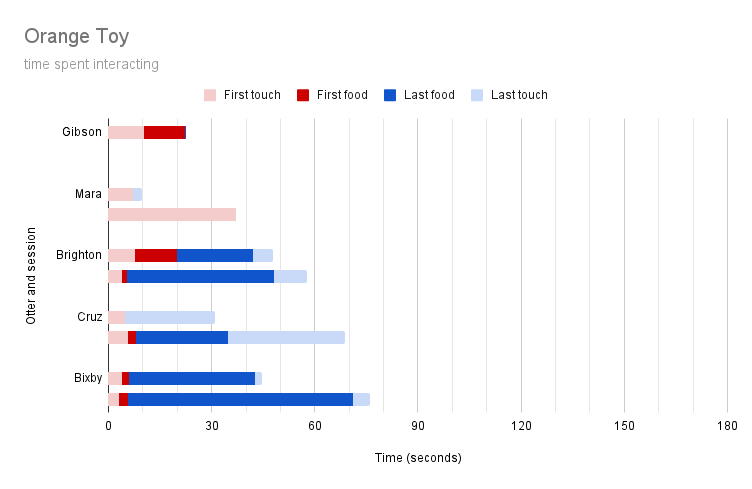

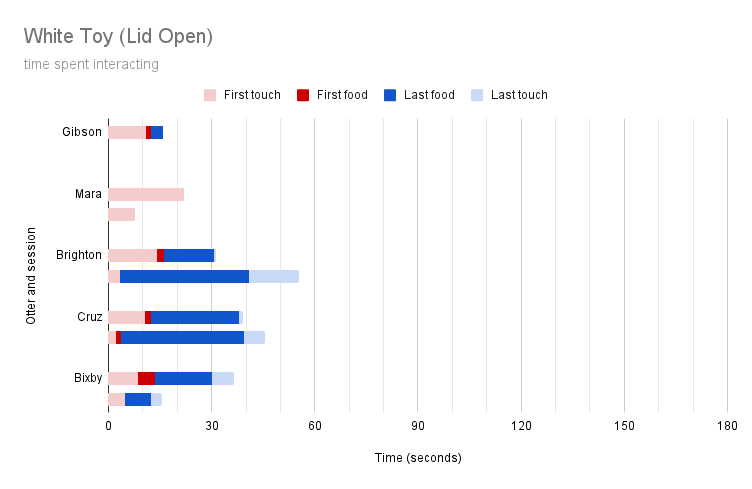

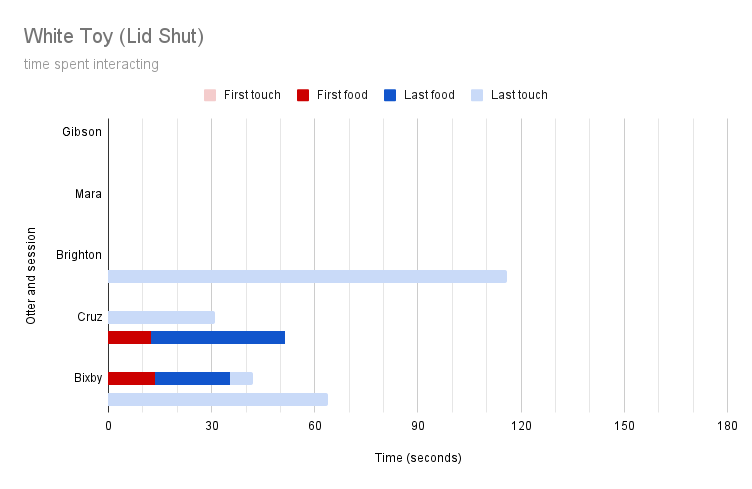

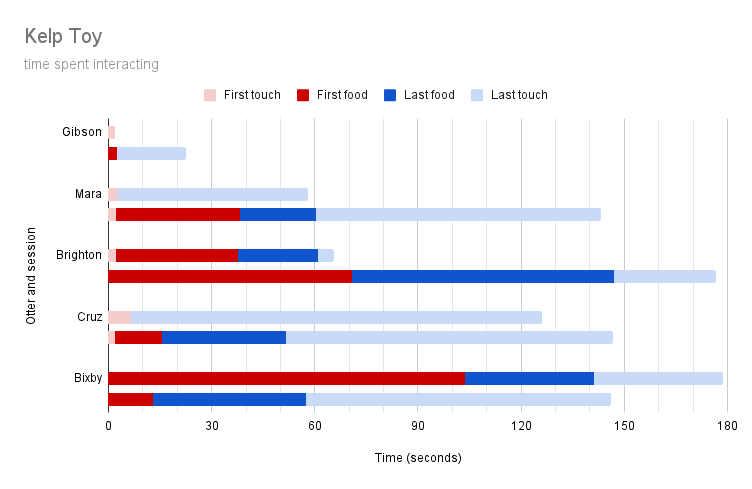

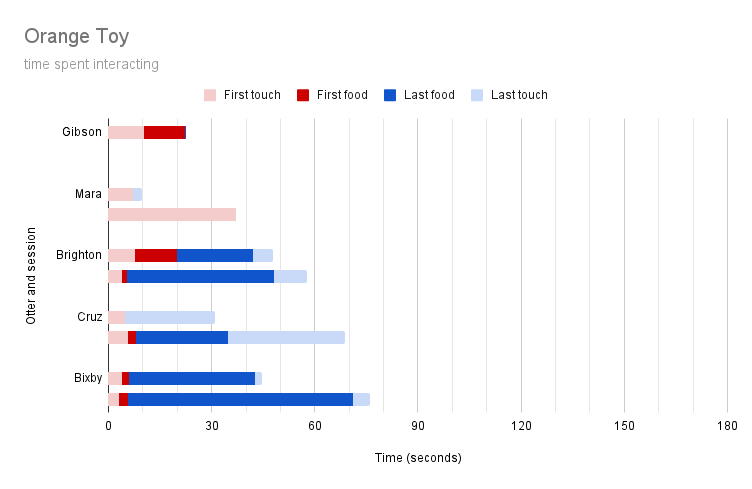

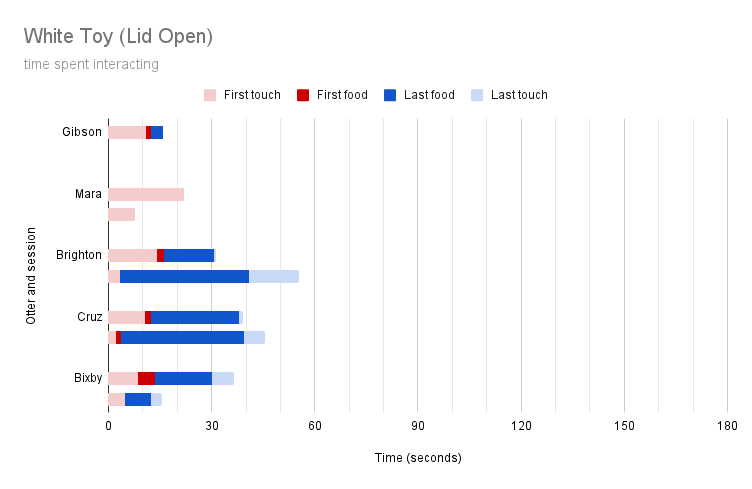

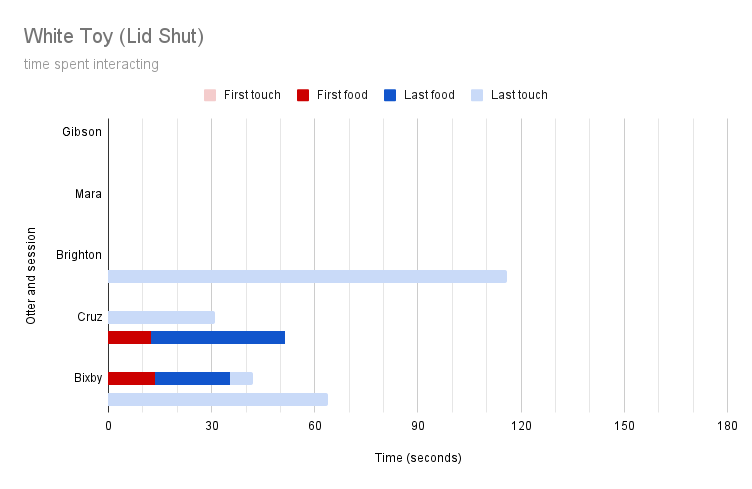

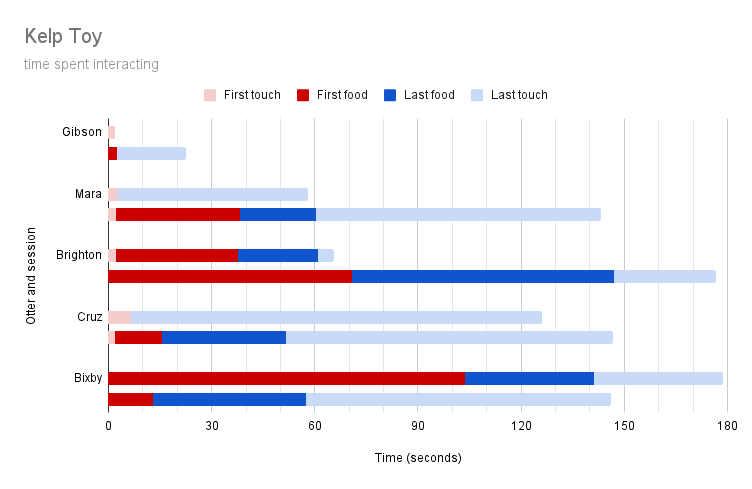

VI. Data Visualization of the above low-fidelity prototype testing sessions to present to stakeholders and provide evidence for which next steps we should take when designing physical prototypes.

VII. One Participatory Design Session with the entire mammal and bird team to confirm which project space would be most beneficial to home in on in the second semester of the project.

The above images are photos taken from one participant's user-value ranking session. Some project topics, such as training and healthcare, were interesting but not needed. Some project topics, such as exhibit design and guest engagement, were fairly needed but not interesting. And some project spaces, such as passive health tracking and spatial enrichment, were both fairly interesting and needed in this trainer's opinion.

I then normalized these findings on a scale from 0 to 1 for each trainer and averaged the results, as shown in the graphs below.

These graphs show the average across participants of the responses normalized between 0 and 1, which helped me better understand how trainers and curators felt about each project space. Based on the lowest graph above, passive health tracking and spatial enrichment were both the most interesting and the most potentially beneficial to the sea otters of all the proposed projects.
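The normalize-then-average step can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it uses min-max scaling, which is one common way to map scores onto a 0 to 1 range and may not be the exact method used here, and the function names and example scores are hypothetical rather than data from the study.

```python
from statistics import mean


def normalize(scores):
    """Min-max scale one participant's scores to the range [0, 1].

    Assumption: higher raw scores mean "more interesting" or "more needed".
    """
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        # Every project space got the same score; place them all at the midpoint.
        return {space: 0.5 for space in scores}
    return {space: (value - lo) / (hi - lo) for space, value in scores.items()}


def average_normalized(participants):
    """Average the normalized scores for each project space across participants."""
    normalized = [normalize(scores) for scores in participants]
    spaces = normalized[0].keys()
    return {space: mean(n[space] for n in normalized) for space in spaces}


# Hypothetical interest scores for two participants (not the study's data).
example = [
    {"training": 1, "exhibit design": 2, "spatial enrichment": 3},
    {"training": 2, "exhibit design": 1, "spatial enrichment": 3},
]
averages = average_normalized(example)
```

Normalizing per participant before averaging keeps one person's wider or narrower scoring range from dominating the combined result.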

To gain a better understanding of what sea otters perceive as signifiers and affordances within the context of enrichment devices, and to inform the design of future high-fidelity prototypes, I designed, built, and tested four low-fidelity prototypes for the five sea otters to play with across two sessions, for a total of 40 trials. These devices were unique in that they were all novel to the animals, they all had food secured in them in different ways, and they all required modes of interaction other than smashing or biting to retrieve food.

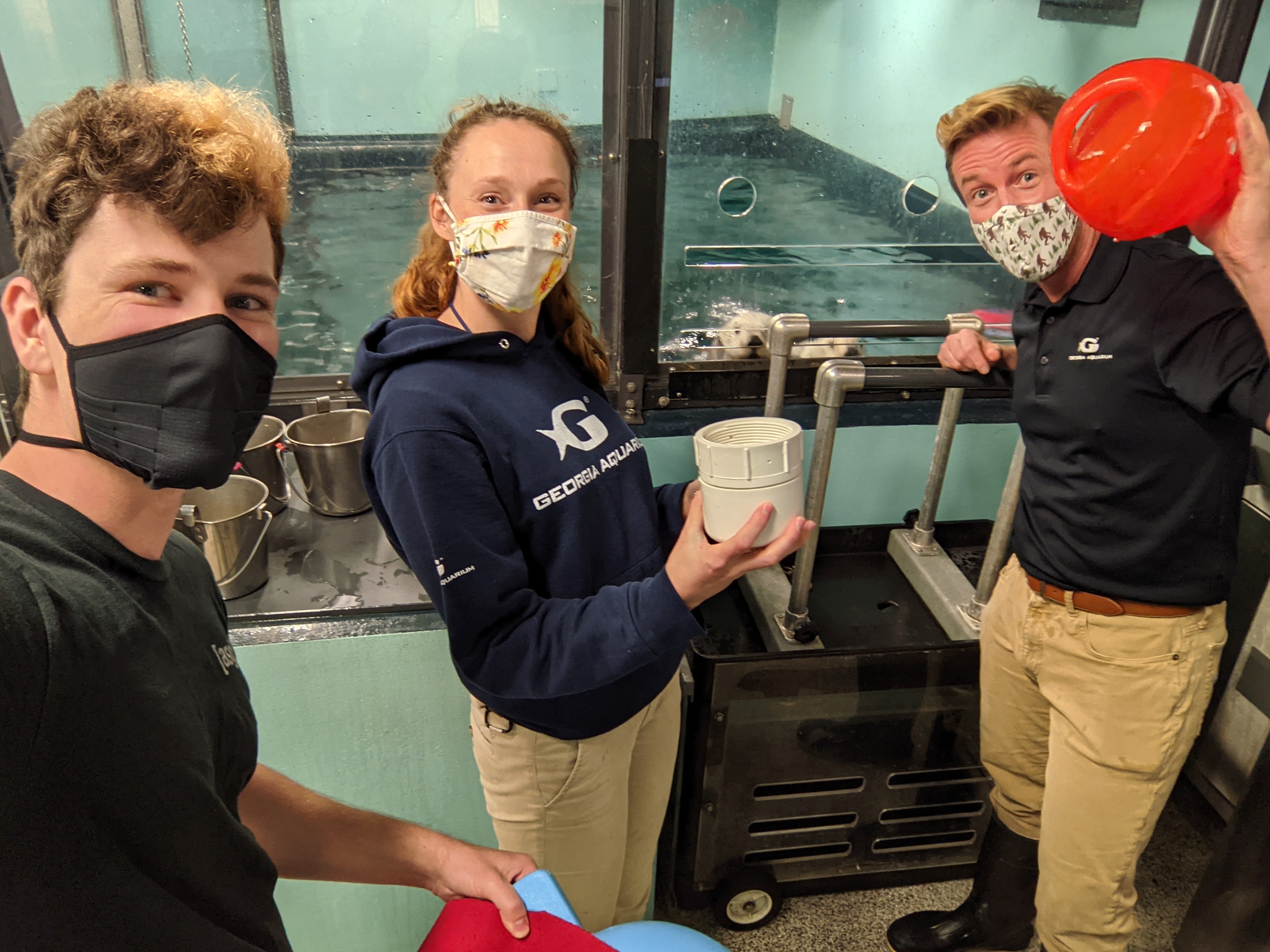

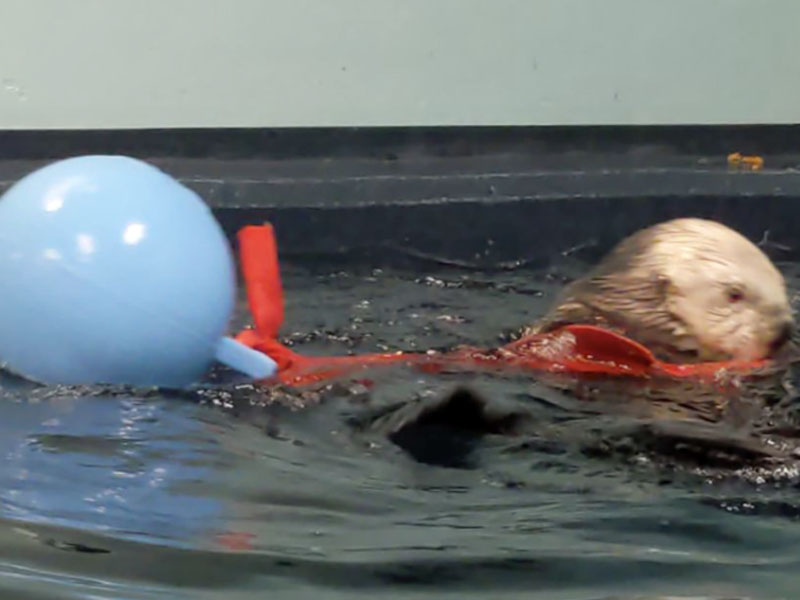

The above four images are the four low-fidelity prototypes that I used to identify how sea otters understand and interact with toys. These prototypes were an orange toy with a slit that could be opened by pressing, a white open toy that food could be retrieved from by grabbing, a white closed toy that needed to be unscrewed to be opened, and a kelp toy with food tied in a square knot at the end of a piece of car wash felt tied to a jolly ball. In each usability testing session, otters were presented with a toy stuffed with food. Sessions were timed and recorded, and four key moments were marked and visualized: first touch (when the otter first touched the toy), first food (when the otter first began retrieving food from the toy), last food (when the otter stopped getting food from the toy or the toy no longer had food in it), and last touch (when the otter stopped touching the toy).
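As a rough illustration of how the four marked moments could be recorded and compared across trials, the sketch below stores them as timestamps in seconds and derives a few durations. The `Trial` record, the derived metrics, and the example values are all hypothetical; the study itself only states that these moments were marked and visualized.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Trial:
    """One otter-and-toy trial; times are seconds from the start of the recording."""
    otter: str
    toy: str
    first_touch: Optional[float]  # None if the otter never touched the toy
    first_food: Optional[float]   # None if no food was retrieved
    last_food: Optional[float]
    last_touch: Optional[float]


def span(start, end):
    """Return end - start, or None when either moment was never observed."""
    if start is None or end is None:
        return None
    return end - start


def summarize(trial):
    """Derive illustrative durations from the four marked moments."""
    return {
        "time_to_first_food": span(trial.first_touch, trial.first_food),
        "feeding_duration": span(trial.first_food, trial.last_food),
        "interaction_duration": span(trial.first_touch, trial.last_touch),
    }


# Hypothetical trial values, not measurements from the study.
trial = Trial("Otter A", "white open toy", 4.0, 9.5, 30.0, 42.0)
summary = summarize(trial)

# A trial in which the otter touched the toy but retrieved no food.
no_food = summarize(Trial("Otter B", "orange toy", 3.0, None, None, 10.0))
```

Keeping unobserved moments as `None` rather than zero avoids treating "never retrieved food" as "retrieved food instantly" when trials are compared.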

From the above low-fidelity prototype testing sessions, I derived eight key takeaways:

I. The kelp toy was most effective at soliciting interactions from the sea otters, but that is not necessarily indicative of the prototype being most effective for health tracking, as ideally the sea otters will want to give the toy back to trainers in exchange for food. Furthermore, the kelp toy would by far be the most complex to instrument with computational elements and maintain for prolonged use by a nontechnical audience without the regular intervention of technical experts. Therefore, this design would not be effective in the long run.

II. Gibson and Mara were anxious about new enrichment devices, but they gradually warmed up to the kelp toy across the two different sessions. In combination with feedback from trainers that these two sea otters were the youngest and could do with desensitization to new enrichment devices, we can assume that they would similarly warm up to other new enrichment devices over time.

III. None of the otters were able to retrieve all of the food from the orange toy. While all but one of them were able to retrieve some amount of food from it, they did not learn during the 40 trials how to press the toy to empty it of all of its held food. The sea otters also did not exhibit any measurable banging, smashing, or biting behaviors on the toy; therefore, similar to the kelp toy, this design would not be effective in the long run.

IV. The white open toy was the quickest for the sea otters to interact with, and they were able to retrieve the food from it with relatively little difficulty. However, similar to the orange toy, they did not exhibit any measurable smashing, banging, or biting interactions on the toy, so this would not be effective in the long run.

V. The white closed toy solicited the most measurable behaviors from the sea otters (smashing, banging, biting, grabbing), but Gibson, Mara, and Brighton were somewhat nervous around the toy and did not exhibit many interactions. Since the otters warm up to new enrichment devices over time, and since this device encouraged otters to exhibit the most measurable behaviors, I designed future prototypes with this mode of interaction in mind.

VI. Otters assume food will be in the center of mass of a toy. When given the kelp prototype, they all began to search for food by looking at the jolly ball rather than the square knot of kelp containing food. Therefore, the final prototype should have food in its center.

VII. Otters look to any openings in toys for food first. When looking at toys, they will aim to spend as little energy as possible retrieving food from them, which often means reaching into any openings in the toy to search for food. Therefore, the final prototype should have some opening in it that is large enough to visibly indicate the presence of food, but small enough that otters cannot simply reach in and grab the food. Alternatively, the final prototype could be designed to be packed densely with snow to form a plug, preventing otters from just reaching in and grabbing the food.

VIII. If an otter thinks food is in something but cannot see that food, it will attempt to smash it open. This occurred with all of the prototypes except for the white open toy. Therefore, the final prototype should be designed in such a way that signifies the presence of food to the otters, but does not have food immediately ready for the taking. The prior point of stuffing the toy with food and snow to form a plug would be an effective means of accomplishing this.
